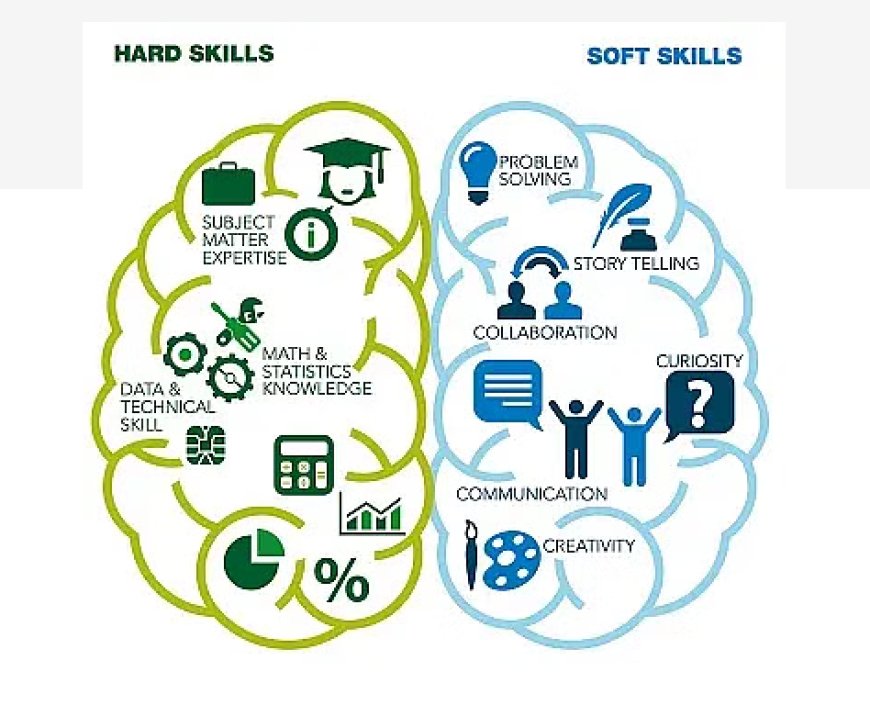

Essential Skills for Data Scientists

Discover the technical, analytical, and soft skills that empower data scientists to extract valuable insights from data and drive impactful decision-making

In today's data-driven world, data scientists play an increasingly vital role across industries, and demand for professionals who can extract meaningful insights from data continues to rise. Whether you aspire to become a data scientist or want to strengthen your existing skill set, it pays to know which skills matter most. This post explores the fundamental skills needed to excel in data science and discusses how relevant courses and certifications can help you build a successful career.

Data Science Fundamentals

Data science fundamentals are the core principles and concepts on which the field is built. They cover the knowledge and skills data scientists need to work effectively with data, extract insights, and support informed decisions. Key areas include:

- Statistical Analysis: Statistical analysis is a critical part of data science. It involves understanding and applying statistical techniques to analyze and interpret data. Data scientists need a solid foundation in descriptive and inferential statistics, hypothesis testing, regression analysis, and probability theory. These concepts help reveal patterns, relationships, and trends within data.

- Data Visualization: Data visualization is the practice of representing data through charts, graphs, and interactive visualizations. It plays a vital role in both data exploration and communication. Data scientists should be proficient with visualization tools and libraries so they can create clear, meaningful representations of complex data. Effective visualization makes patterns, outliers, and trends within the data easier to see.

- Exploratory Data Analysis (EDA): EDA is the process of analyzing and summarizing data to gain initial insights and identify patterns or anomalies. It typically combines summary statistics and plots with supporting steps such as data cleaning, transformation, imputation, and aggregation. EDA helps data scientists understand the structure of the data, uncover hidden relationships, and spot issues that must be addressed before further analysis.

- Programming Languages: Proficiency in programming is essential for data scientists. Python and R are two of the most commonly used languages in data science, and data scientists should be able to write code in them for data manipulation, analysis, and modeling. Python is particularly popular for its versatility and ease of use, thanks to libraries such as NumPy, pandas, and scikit-learn.
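To make the statistical side of these fundamentals concrete, here is a minimal sketch that uses only Python's standard library to summarize a small dataset and fit a simple linear regression by ordinary least squares. The data (hours studied versus exam scores) and the helper names `describe` and `fit_line` are hypothetical; in day-to-day work, libraries such as NumPy, pandas, or scikit-learn would typically handle these steps.

```python
import statistics


def describe(values):
    """Return basic descriptive statistics for a list of numbers."""
    return {
        "mean": statistics.mean(values),
        "median": statistics.median(values),
        "stdev": statistics.stdev(values),  # sample standard deviation
    }


def fit_line(xs, ys):
    """Fit y = intercept + slope * x by ordinary least squares."""
    x_bar = statistics.mean(xs)
    y_bar = statistics.mean(ys)
    # Slope = covariance(x, y) / variance(x); the 1/(n-1) factors cancel out.
    sxy = sum((x - x_bar) * (y - y_bar) for x, y in zip(xs, ys))
    sxx = sum((x - x_bar) ** 2 for x in xs)
    slope = sxy / sxx
    # The fitted line always passes through the point of means.
    intercept = y_bar - slope * x_bar
    return intercept, slope


# Hypothetical data: hours studied vs. exam score.
hours = [1, 2, 3, 4, 5]
scores = [52, 55, 61, 64, 68]

print(describe(scores))
intercept, slope = fit_line(hours, scores)
print(f"score = {intercept:.1f} + {slope:.1f} * hours (approx.)")
```

With this toy data the slope comes out at 4.1 points per hour and the intercept at 47.7, so each extra hour of study is associated with roughly four more points.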

Big Data Handling

In today's digital era, the amount of data being generated is growing rapidly. Big data refers to datasets so large or complex that they cannot be easily processed or analyzed with traditional data processing techniques. Handling big data is a crucial skill for data scientists because it lets them extract meaningful insights from these massive datasets. Three characteristics, volume, velocity, and variety, define the challenge:

Big data is characterized by its sheer volume, typically ranging from terabytes to petabytes and beyond. Traditional data processing tools may not be able to store and analyze data at this scale efficiently, so data scientists rely on specialized technologies, such as distributed storage and processing frameworks like Hadoop and Apache Spark, to work with it effectively.
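One common way to work with data that is too large to load at once is to stream it in fixed-size chunks and keep only running aggregates in memory. The sketch below illustrates that idea on a single machine with Python's `csv` module; the column names `region` and `amount` and the helper functions are hypothetical. pandas offers a similar pattern through the `chunksize` argument of `read_csv`, and frameworks such as Apache Spark apply the same split-and-aggregate idea across a cluster rather than in one process.

```python
import csv
import io
from collections import defaultdict
from itertools import islice


def read_in_chunks(rows, chunk_size):
    """Yield successive lists of at most chunk_size rows from any iterable."""
    rows = iter(rows)
    while True:
        chunk = list(islice(rows, chunk_size))
        if not chunk:
            return
        yield chunk


def total_by_key(rows, chunk_size=2):
    """Sum the 'amount' column per 'region', processing one chunk at a time."""
    totals = defaultdict(float)
    for chunk in read_in_chunks(rows, chunk_size):
        # Only the current chunk and the running totals are held in memory.
        for row in chunk:
            totals[row["region"]] += float(row["amount"])
    return dict(totals)


# A small in-memory CSV stands in for a file too large to load at once.
data = io.StringIO("region,amount\nnorth,10\nsouth,5\nnorth,2.5\neast,7\nsouth,1\n")
print(total_by_key(csv.DictReader(data)))
```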

Another defining characteristic of big data is its velocity: the speed at which data is generated and must be processed. Real-time and near-real-time sources such as social media streams, sensor readings, and transaction data require techniques for fast ingestion and processing that keep up with the pace at which data arrives.
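For high-velocity streams, statistics often need to be updated as each event arrives rather than recomputed over the full history. A minimal sketch, assuming numeric readings arrive one at a time, is Welford's online algorithm, which maintains a running mean and variance in constant memory. The `RunningStats` class and the sensor values are illustrative and not part of any particular streaming framework.

```python
import math


class RunningStats:
    """Welford's online algorithm: update mean and variance one value at a time."""

    def __init__(self):
        self.count = 0
        self.mean = 0.0
        self._m2 = 0.0  # running sum of squared deviations from the current mean

    def update(self, x):
        self.count += 1
        delta = x - self.mean               # deviation from the old mean
        self.mean += delta / self.count
        self._m2 += delta * (x - self.mean)  # uses both old and new mean

    @property
    def variance(self):
        # Sample variance; undefined (NaN) for fewer than two observations.
        return self._m2 / (self.count - 1) if self.count > 1 else float("nan")


# Simulated sensor readings arriving one by one.
stats = RunningStats()
for reading in [20.5, 21.0, 19.8, 22.1, 20.6]:
    stats.update(reading)
    print(f"n={stats.count} mean={stats.mean:.2f}")
print(f"std dev={math.sqrt(stats.variance):.3f}")
```

Each update costs the same small amount of work no matter how many readings have already arrived, which is what makes this style of computation suitable for continuous streams.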

Big data is also diverse, coming in structured, semi-structured, and unstructured formats. Structured data follows a defined schema and is organized in tables of rows and columns; semi-structured data, such as JSON or XML, has some organization through keys or tags but no fixed schema; and unstructured data, including text, images, audio, video, and social media content, does not fit easily into traditional databases. Data scientists must be skilled at handling these different formats and integrating them for analysis.
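Integrating semi-structured data often starts with flattening nested records into a common set of columns. The sketch below, built around a hypothetical `flatten` helper and Python's standard `json` module, joins nested keys with dots and fills fields that a record lacks with `None`. It does not expand lists, which real data may also contain; pandas provides `json_normalize` for the same job.

```python
import json


def flatten(record, parent_key="", sep="."):
    """Flatten nested dicts into a single level, joining keys with sep."""
    flat = {}
    for key, value in record.items():
        new_key = f"{parent_key}{sep}{key}" if parent_key else key
        if isinstance(value, dict):
            flat.update(flatten(value, new_key, sep))  # recurse into sub-objects
        else:
            flat[new_key] = value
    return flat


# Hypothetical semi-structured events: fields vary from record to record.
raw = """
[{"id": 1, "user": {"name": "Ana", "country": "PT"}, "text": "Great product"},
 {"id": 2, "user": {"name": "Ben"}, "rating": 4}]
"""
rows = [flatten(r) for r in json.loads(raw)]

# Build a common set of columns; fields missing from a record become None.
columns = sorted({key for row in rows for key in row})
table = [[row.get(col) for col in columns] for row in rows]
print(columns)
for line in table:
    print(line)
```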

Machine Learning and Predictive Modeling

- Machine learning is a subset of artificial intelligence focused on developing algorithms and statistical models that enable computers to learn from data and make predictions or decisions without being explicitly programmed.

- Supervised learning is an approach in which the model learns from labeled data to make predictions or classifications, for example predicting house prices from past sales. Unsupervised learning, by contrast, learns from unlabeled data to discover patterns or groupings, for example segmenting customers by purchasing behavior.

- Feature engineering is the process of selecting, transforming, and creating relevant features from raw data to improve the performance of machine learning models.

- Model evaluation is essential for assessing how well a machine learning model performs. For classification tasks, common metrics include accuracy, precision, recall, F1 score, and the area under the receiver operating characteristic curve (AUC-ROC).

- Overfitting occurs when a model performs exceptionally well on the training data but fails to generalize to unseen data. Cross-validation helps detect overfitting by estimating performance on held-out data, while techniques such as regularization and ensemble methods can help reduce it.
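The evaluation and cross-validation ideas above can be sketched without any libraries. The code below computes accuracy, precision, recall, and F1 for a binary classifier from made-up labels, and generates contiguous k-fold train/test splits, the building block of cross-validation. It is a simplified illustration that assumes binary labels with `1` as the positive class and does no shuffling; scikit-learn's `sklearn.metrics` module and `KFold` class provide production-ready versions.

```python
def confusion_counts(y_true, y_pred, positive=1):
    """Count true positives, false positives, false negatives and true negatives."""
    tp = fp = fn = tn = 0
    for actual, predicted in zip(y_true, y_pred):
        if predicted == positive and actual == positive:
            tp += 1
        elif predicted == positive:
            fp += 1
        elif actual == positive:
            fn += 1
        else:
            tn += 1
    return tp, fp, fn, tn


def classification_metrics(y_true, y_pred, positive=1):
    """Accuracy, precision, recall and F1, guarding against division by zero."""
    tp, fp, fn, tn = confusion_counts(y_true, y_pred, positive)
    accuracy = (tp + tn) / len(y_true)
    precision = tp / (tp + fp) if tp + fp else 0.0
    recall = tp / (tp + fn) if tp + fn else 0.0
    f1 = 2 * precision * recall / (precision + recall) if precision + recall else 0.0
    return {"accuracy": accuracy, "precision": precision, "recall": recall, "f1": f1}


def k_fold_indices(n, k):
    """Yield (train, test) index lists for k contiguous, non-shuffled folds."""
    # Spread the remainder over the first folds so sizes differ by at most one.
    fold_sizes = [n // k + (1 if i < n % k else 0) for i in range(k)]
    start = 0
    for size in fold_sizes:
        test = list(range(start, start + size))
        train = list(range(0, start)) + list(range(start + size, n))
        yield train, test
        start += size


# Hypothetical true labels and model predictions.
y_true = [1, 0, 1, 1, 0, 0, 1, 0]
y_pred = [1, 1, 0, 1, 0, 1, 1, 0]
print(classification_metrics(y_true, y_pred))

for train, test in k_fold_indices(n=8, k=4):
    print("train:", train, "test:", test)
```

Here precision (0.6) and recall (0.75) differ because the model makes two false positives but only one false negative, a trade-off that accuracy alone (0.625) hides. In cross-validation, a model would be trained on each `train` split and scored on the matching `test` split, and the scores averaged.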

Data Wrangling and Cleaning

Data wrangling and cleaning are essential steps in the data science process. They involve preparing raw, unstructured, or inconsistent data for analysis. Data wrangling focuses on transforming and reshaping data, while cleaning focuses on identifying and addressing errors, missing values, and outliers.

During the data wrangling phase, data scientists structure the data in a format suitable for analysis. Typical tasks include merging multiple datasets, reshaping data into a tidy format (one row per observation and one column per variable), and standardizing data types and formats. Data wrangling often relies on tools such as Python's pandas library or SQL queries to manipulate and transform the data.
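The two wrangling tasks named above, merging datasets and reshaping into a tidy format, can be sketched with plain Python data structures. The `inner_join` and `wide_to_long` helpers below are hypothetical stand-ins that roughly mirror what pandas `merge` (with an inner join) and `melt` do; the customer and sales records are invented for illustration.

```python
# Two hypothetical datasets sharing a customer_id key.
customers = [
    {"customer_id": 1, "name": "Ana"},
    {"customer_id": 2, "name": "Ben"},
    {"customer_id": 3, "name": "Caro"},
]
sales = [  # wide format: one column per quarter
    {"customer_id": 1, "q1": 100, "q2": 120},
    {"customer_id": 2, "q1": 80, "q2": 95},
]


def inner_join(left, right, key):
    """Combine rows from left and right that share the same key value."""
    right_by_key = {row[key]: row for row in right}  # assumes unique keys on the right
    return [
        {**left_row, **right_by_key[left_row[key]]}
        for left_row in left
        if left_row[key] in right_by_key  # rows without a match are dropped
    ]


def wide_to_long(rows, id_cols, value_cols, var_name, value_name):
    """Reshape to tidy (long) format: one row per id and measured variable."""
    return [
        {**{c: row[c] for c in id_cols}, var_name: col, value_name: row[col]}
        for row in rows
        for col in value_cols
    ]


merged = inner_join(customers, sales, key="customer_id")
tidy = wide_to_long(merged, ["customer_id", "name"], ["q1", "q2"], "quarter", "revenue")
for row in tidy:
    print(row)
```

Customer 3 has no sales record, so the inner join drops it; a left join would keep it with missing revenue values instead, which is a choice worth making deliberately.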

Data cleaning, on the other hand, involves identifying and correcting issues within the dataset. This includes handling missing values by imputing or removing them, depending on the analysis requirements. Outliers and anomalies are also investigated during this phase and then corrected, removed, or retained depending on whether they reflect errors or genuine extreme values, which protects the integrity and accuracy of the data.
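As a minimal sketch of these cleaning steps, the code below fills missing values with the median of the observed values and flags points outside the common 1.5 × IQR fences. Both choices are assumptions made for illustration; mean imputation, model-based imputation, or domain-specific thresholds may suit other datasets better. The data is hypothetical, and `statistics.quantiles` requires Python 3.8 or later.

```python
import statistics


def impute_median(values):
    """Replace None entries with the median of the observed values."""
    observed = [v for v in values if v is not None]
    fill = statistics.median(observed)  # robust to the outlier, unlike the mean
    return [fill if v is None else v for v in values]


def iqr_bounds(values, k=1.5):
    """Return (low, high) fences at Q1 - k*IQR and Q3 + k*IQR."""
    q1, _, q3 = statistics.quantiles(values, n=4)
    iqr = q3 - q1
    return q1 - k * iqr, q3 + k * iqr


# Hypothetical daily order counts with two missing entries and one suspicious spike.
orders = [12, 15, None, 14, 13, 250, 16, None, 15, 14]
filled = impute_median(orders)
low, high = iqr_bounds(filled)
flagged = [v for v in filled if not low <= v <= high]
kept = [v for v in filled if low <= v <= high]
print("filled:", filled)
print("flagged as outliers:", flagged)
```

The sketch only flags the spike; whether to drop, cap, or keep it is an analysis decision that depends on whether it is a data-entry error or a genuine surge in orders.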

Data Visualization

- Data visualization is the process of representing data and information visually through charts, graphs, maps, and other visual elements.

- It helps communicate complex data clearly and concisely, making it easier for audiences to understand and interpret.

- Effective data visualization enables the identification of patterns, trends, and outliers within datasets.

- It facilitates storytelling by presenting data in a compelling and engaging way, allowing for more impactful presentations and reports.

- Various tools and libraries are available for creating visualizations, such as Tableau, Power BI, matplotlib, and D3.js.

- Data visualization is used across many industries and fields, including business, marketing, finance, healthcare, and academia.

Business Acumen

Business acumen is a critical skill for data scientists that goes beyond technical expertise. It involves understanding the business context, industry dynamics, and organizational goals in order to apply data-driven insights effectively. Data scientists with strong business acumen can bridge the gap between data analysis and business decision-making.

Having business acumen means understanding how data science aligns with the broader objectives of the organization. It involves being aware of the challenges and opportunities within the industry and identifying how data can be leveraged to gain a competitive advantage. By understanding the business landscape, data scientists can frame their analyses and recommendations in a way that resonates with key stakeholders.

Continuous Learning and Adaptability

Continuous learning and adaptability are essential traits for data scientists in today's rapidly evolving field. Here is a brief explanation of these concepts:

Continuous Learning

Continuous learning refers to the process of acquiring new knowledge, skills, and expertise throughout one's professional career. In the context of data science, continuous learning involves staying updated with the latest advancements, tools, algorithms, and best practices in the field. It goes beyond formal education and encourages self-driven exploration, experimentation, and learning from real-world experiences.

- Keep up with emerging technologies: Data science is a rapidly evolving field, with new tools, frameworks, and techniques constantly emerging. By engaging in continuous learning, data scientists can stay abreast of these advancements and identify opportunities to enhance their skills and efficiency.

- Adapt to changing industry trends: Industries and business needs evolve over time, and data scientists need to adapt to these changes. Continuous learning enables data scientists to understand the evolving needs of their industry, learn new methodologies, and apply them to solve complex problems.

Adaptability

Adaptability refers to the ability to adjust and thrive in new or changing environments, situations, or requirements. In the context of data science, adaptability is crucial because data scientists often encounter diverse datasets, problem domains, and project requirements.

- Embrace new challenges: Data scientists should be open to tackling new challenges and willing to step out of their comfort zones. They may encounter datasets with different structures, quality issues, or missing values, requiring them to adapt their approaches and choose suitable techniques for each scenario.

- Iterate and improve: Adaptability enables data scientists to refine their analyses and models over time. They may need to revise their methodologies, incorporate feedback from stakeholders, and retrain their models to improve accuracy and performance.

Becoming a successful data scientist requires a combination of technical skills, domain knowledge, and a passion for exploring data. By acquiring the essential skills discussed in this blog, you'll be equipped to tackle real-world data challenges and extract valuable insights. Remember to pursue relevant courses and certifications in data science to formalize your knowledge and demonstrate your expertise to potential employers. The journey to a fulfilling data science career begins with a commitment to learning, adaptability, and a curiosity-driven mindset.