The Intersection of AI and Data Engineering: Building Intelligent Data Pipelines

Explore the dynamic intersection of AI and Data Engineering in this insightful guide. Learn how to build intelligent data pipelines that leverage AI for enhanced data processing and analysis.

The intersection of Artificial Intelligence (AI) and Data Engineering is where the engineering work of collecting, storing, and shaping data meets models that learn from that data. Combining the two leads to Intelligent Data Pipelines (IDPs): pipelines that use learned models alongside conventional processing. The concept has the potential to change how data is managed, analyzed, and acted on across many industries.

Understanding Data Engineering

Data Engineering is a critical discipline that works alongside data science, applying architectural and engineering principles to manage and process large volumes of data efficiently. At its core, Data Engineering involves the acquisition, storage, and transformation of raw data into a format suitable for analysis. Organizations need this process to derive meaningful insights from their data, which makes it a foundation of any data-driven strategy.

In the realm of Data Engineering, the journey begins with data acquisition, where raw data is collected from various sources, such as databases, APIs, logs, and external datasets. Subsequently, this data undergoes storage, often involving databases or data warehouses designed to handle large volumes of information. The architecture must be scalable, ensuring it can accommodate the ever-growing influx of data in today's digitally connected world.
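As a minimal sketch of acquisition followed by storage, the example below reads records from two stand-in sources (a JSON payload such as an API might return, and a CSV export) and writes them to an in-memory SQLite table. The payloads, the `events` table, and the `acquire` helper are all hypothetical and chosen only for illustration; a production pipeline would use real connectors and a scalable store.

```python
import csv
import io
import json
import sqlite3

# Hypothetical stand-ins for an API response and a CSV export.
api_payload = '[{"id": 1, "event": "login"}, {"id": 2, "event": "purchase"}]'
csv_export = "id,event\n3,logout\n4,login\n"


def acquire():
    """Yield (id, event, source) tuples from both sources in one common shape."""
    for item in json.loads(api_payload):
        yield int(item["id"]), item["event"], "api"
    for row in csv.DictReader(io.StringIO(csv_export)):
        yield int(row["id"]), row["event"], "csv"


# Storage: an in-memory SQLite database stands in for a warehouse.
conn = sqlite3.connect(":memory:")
conn.execute("CREATE TABLE events (id INTEGER PRIMARY KEY, event TEXT, source TEXT)")
conn.executemany("INSERT INTO events VALUES (?, ?, ?)", acquire())
conn.commit()

total_rows = conn.execute("SELECT COUNT(*) FROM events").fetchone()[0]
login_count = conn.execute(
    "SELECT COUNT(*) FROM events WHERE event = 'login'"
).fetchone()[0]
```

Bringing both sources into one tuple shape before storage keeps the storage layer unaware of where each record came from, apart from the explicit `source` column.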

Transformation is another critical aspect of Data Engineering, involving the cleaning, structuring, and organizing of data to make it suitable for analysis. This step is particularly vital given the variability and inconsistency often present in raw data. The transformed data sets the stage for downstream processes, including analytics, reporting, and, increasingly, the application of artificial intelligence (AI) algorithms.
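The cleaning and structuring step can be sketched as a small function that normalizes one record at a time. The field names (`name`, `amount`, `signup`) and the two accepted date layouts are assumptions made for this example, not part of any particular system.

```python
from datetime import datetime


def clean_record(raw):
    """Normalize one raw record, or return None if it cannot be repaired."""
    name = (raw.get("name") or "").strip().title()
    if not name:
        return None  # in this sketch, a record without a name is unusable

    try:
        # Remove thousands separators before converting to a number.
        amount = float(str(raw.get("amount", "")).replace(",", ""))
    except ValueError:
        return None

    # Accept two hypothetical date layouts and emit ISO 8601; leave None otherwise.
    signup = None
    for fmt in ("%Y-%m-%d", "%d/%m/%Y"):
        try:
            signup = datetime.strptime(str(raw.get("signup") or ""), fmt).date().isoformat()
            break
        except ValueError:
            continue

    return {"name": name, "amount": amount, "signup": signup}


raw_rows = [
    {"name": "  alice smith ", "amount": "1,200.50", "signup": "2023-01-15"},
    {"name": "BOB JONES", "amount": "80", "signup": "03/02/2023"},
    {"name": "", "amount": "10", "signup": "2023-01-01"},
    {"name": "carol", "amount": "n/a", "signup": "2023-05-05"},
]

# Keep only the records that could be repaired.
cleaned = [r for r in (clean_record(row) for row in raw_rows) if r is not None]
```

Here two of the four rows survive: the record with an empty name and the one with a non-numeric amount are dropped rather than guessed at.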

Traditional data engineering has faced challenges in coping with the exponential growth of data and the need for faster and more complex analyses. However, advancements in technology, including cloud computing and distributed computing frameworks, have revolutionized the field. This evolution is essential as organizations increasingly rely on data not only for historical analysis but also for real-time decision-making, necessitating agile and efficient data engineering practices.

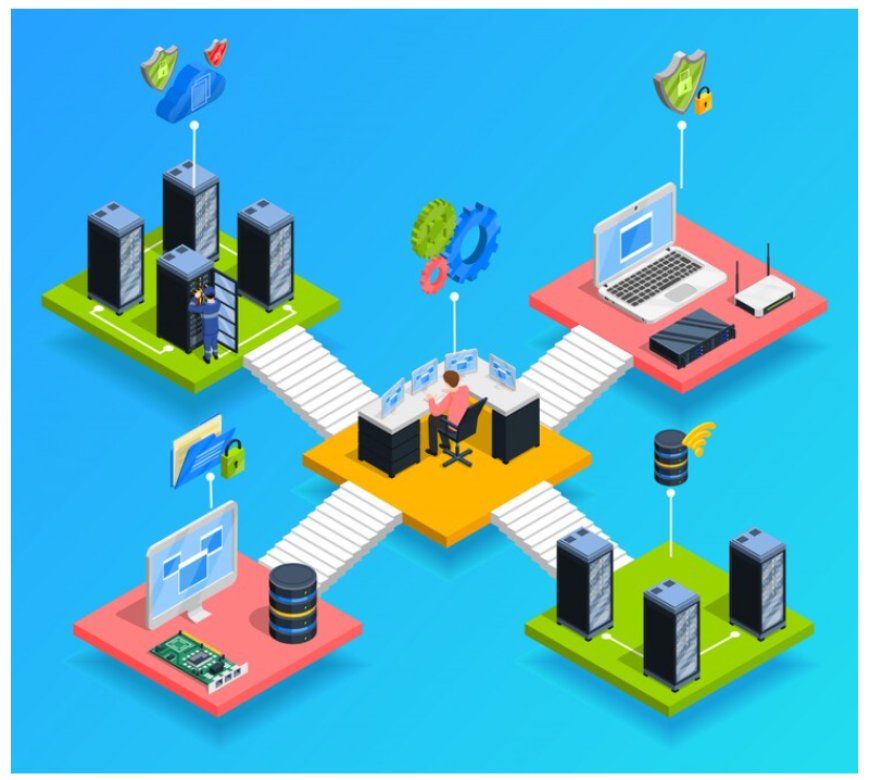

Building Intelligent Data Pipelines

Building Intelligent Data Pipelines (IDPs) combines artificial intelligence (AI) with traditional data processing. An Intelligent Data Pipeline is a framework designed to automate and optimize the end-to-end handling of data, from acquisition through storage, processing, and analysis. Unlike conventional pipelines, IDPs use machine learning algorithms to support decision-making, predictive analytics, and data-driven insights. These pipelines are built to handle large and complex datasets, allowing organizations to extract valuable knowledge efficiently.

At the core of IDPs lie advanced technologies such as AI and machine learning frameworks, which empower the pipeline to adapt, learn, and evolve with changing data patterns. This integration not only improves the accuracy of data processing but also enables real-time decision-making, transforming data from a static resource into a dynamic asset. Building Intelligent Data Pipelines involves careful consideration of data quality, governance, and security, addressing the challenges that arise in handling diverse and voluminous datasets. As organizations increasingly recognize the importance of data in driving business strategies, the construction of Intelligent Data Pipelines becomes a strategic imperative for those aiming to harness the full potential of both AI and data engineering.
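To make "adapt, learn, and evolve with changing data patterns" concrete, here is a deliberately small sketch: a pipeline stage that keeps running statistics of the values it has seen and flags outliers against them. A running z-score stands in for a real machine learning model, and the class name, threshold, and warm-up length are all assumptions for illustration.

```python
import math


class RunningAnomalyDetector:
    """Tracks mean and variance online (Welford's algorithm) and scores new values."""

    def __init__(self, threshold=3.0, warmup=5):
        self.threshold = threshold
        self.warmup = max(warmup, 2)  # at least two points are needed for a variance
        self.n = 0
        self.mean = 0.0
        self.m2 = 0.0  # running sum of squared deviations from the mean

    def score(self, x):
        """Return the absolute z-score of x, or 0.0 while still warming up."""
        if self.n < self.warmup:
            return 0.0
        std = math.sqrt(self.m2 / (self.n - 1))
        return abs(x - self.mean) / std if std > 0 else 0.0

    def update(self, x):
        """Fold x into the running statistics."""
        self.n += 1
        delta = x - self.mean
        self.mean += delta / self.n
        self.m2 += delta * (x - self.mean)


def run_pipeline(values, detector):
    """Score each value, then learn only from values judged normal."""
    for x in values:
        z = detector.score(x)
        is_anomaly = z > detector.threshold
        if not is_anomaly:
            detector.update(x)
        yield {"value": x, "z": round(z, 2), "anomaly": is_anomaly}


readings = [10.0, 10.5, 9.8, 10.2, 10.1, 9.9, 10.3, 55.0, 10.0]
results = list(run_pipeline(readings, RunningAnomalyDetector()))
anomalies = [r["value"] for r in results if r["anomaly"]]
```

The stage has no hard-coded notion of a normal reading; its idea of normal comes from the data it has processed so far, which is the property that distinguishes it from a fixed rule.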

Key Technologies and Tools

The synergy between artificial intelligence (AI) and data engineering relies heavily on a suite of advanced technologies and tools, collectively empowering the creation and management of intelligent data pipelines. These tools form the backbone of a robust infrastructure that can handle the complexities of modern data ecosystems.

Data extraction and ingestion tools matter most in the initial stages of building intelligent data pipelines. Technologies such as Apache Kafka (event streaming), AWS Glue (managed ETL), and Apache NiFi (data flow automation) enable the collection and ingestion of data from diverse sources. These tools move raw data efficiently to the processing stage, setting the foundation for subsequent analysis.

Once the data is ingested, data processing and transformation tools come into play. Apache Spark is a prominent example for distributed manipulation and analysis of data, while TensorFlow and PyTorch are machine learning frameworks used to train and run the models that operate on that data. Together, these tools apply statistical models and machine learning algorithms to extract meaningful insights, adding an intelligent layer to the data engineering process.

Challenges in Building Intelligent Data Pipelines

Data Quality and Governance

- Data Quality: Ensuring that the data used in the pipeline is accurate, complete, and reliable can be a significant challenge. Data may come from various sources, and discrepancies, missing values, and inaccuracies need to be addressed.

- Data Governance: Defining and enforcing data governance policies is crucial to maintaining data quality. This involves creating rules and procedures for data management, access, and usage. Balancing governance with agility can be a delicate task.
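One way to connect the two points above is to express quality rules as data, so that the rules themselves can be reviewed and governed. The sketch below checks required fields and numeric ranges; the rule format, field names, and `check_quality` function are hypothetical.

```python
def check_quality(rows, required, ranges):
    """Return a list of (row_index, field, problem) tuples for every violation."""
    issues = []
    for i, row in enumerate(rows):
        for field in required:
            if row.get(field) in (None, ""):
                issues.append((i, field, "missing"))
        for field, (low, high) in ranges.items():
            value = row.get(field)
            if isinstance(value, (int, float)) and not (low <= value <= high):
                issues.append((i, field, "out of range"))
    return issues


rows = [
    {"id": 1, "email": "a@example.com", "age": 34},
    {"id": 2, "email": "", "age": 29},
    {"id": 3, "email": "c@example.com", "age": 212},
]
required_fields = ["id", "email"]

issues = check_quality(rows, required=required_fields, ranges={"age": (0, 120)})

# Share of required cells that are actually filled in.
missing = sum(1 for _, _, problem in issues if problem == "missing")
completeness = 1 - missing / (len(rows) * len(required_fields))
```

Reporting violations instead of silently dropping rows leaves the decision about what to do with bad data to whoever owns the governance policy.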

Scalability and Performance

- Scalability: As the volume of data grows, IDPs must be capable of scaling to handle larger workloads. Scaling can involve adding processing power and storage capacity, as well as optimizing algorithms to accommodate increased data flows.

- Performance: Efficient processing and real-time data handling are crucial for IDPs. Slow data processing can lead to delays and impact decision-making. Ensuring that the pipeline performs well under various conditions and loads is a challenge.
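A common first step toward both goals is to process data in bounded batches rather than loading everything into memory at once. The following sketch illustrates the idea with a generator; `in_batches`, the batch size, and the synthetic stream are assumptions for illustration, and real pipelines would typically rely on a distributed framework for this.

```python
from itertools import islice


def in_batches(iterable, size):
    """Yield lists of at most `size` items without materializing the whole input."""
    it = iter(iterable)
    while True:
        batch = list(islice(it, size))
        if not batch:
            return
        yield batch


def total_by_batch(records, size):
    """Aggregate a stream batch by batch, returning (total, number_of_batches)."""
    total = 0
    batches = 0
    for batch in in_batches(records, size):
        total += sum(batch)
        batches += 1
    return total, batches


# A generator stands in for a source too large to hold in memory.
stream = (i * 2 for i in range(1_000))
total, batches = total_by_batch(stream, size=128)
```

Memory use depends on the batch size rather than the input size, so the same code handles a thousand records or a billion, and each batch is a natural unit to hand to parallel workers.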

Security and Privacy Concerns

- Data Security: Protecting sensitive data from unauthorized access and data breaches is a top priority. Security measures such as encryption, access controls, and intrusion detection systems need to be implemented.

- Privacy Compliance: Adhering to data privacy regulations like GDPR, HIPAA, or CCPA can be challenging. Ensuring that personal and sensitive data is handled in compliance with these regulations is essential and often involves complex processes.
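One small building block for handling sensitive fields is keyed pseudonymization, sketched below with Python's standard `hmac` module. The key, field names, and helper functions are hypothetical. This is only one measure among many and does not by itself make a pipeline compliant with any regulation.

```python
import hashlib
import hmac

# Hypothetical key for illustration; in practice, load it from a secret store.
SECRET_KEY = b"replace-with-a-managed-secret"


def pseudonymize(value, key=SECRET_KEY):
    """Replace a value with a keyed SHA-256 digest (stable for the same key)."""
    return hmac.new(key, value.encode("utf-8"), hashlib.sha256).hexdigest()


def mask_record(record, sensitive_fields):
    """Return a copy of the record with the sensitive fields pseudonymized."""
    return {
        field: pseudonymize(str(value)) if field in sensitive_fields else value
        for field, value in record.items()
    }


record = {"user_id": 42, "email": "alice@example.com", "country": "DE"}
masked = mask_record(record, sensitive_fields={"email"})
```

Because the digest is stable for a given key, downstream joins and counts on the masked field still work, while the raw value never leaves the ingestion stage.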

Talent and Skill Gaps

- Data Engineering Skills: Building IDPs requires a skilled workforce proficient in data engineering, machine learning, and AI. The demand for these skills often outpaces the supply, leading to a talent gap.

- Interdisciplinary Knowledge: IDPs require collaboration between data engineers, data scientists, machine learning engineers, and domain experts. Bridging the gap between these disciplines and fostering effective communication can be a challenge.

Best Practices for Integrating AI and Data Engineering

Integrating artificial intelligence (AI) with data engineering involves a complex synergy between advanced analytics and robust data processing infrastructure. To ensure seamless collaboration between these two domains, several best practices should be considered.

First, focus on data quality assurance. Because AI relies heavily on accurate and reliable data, rigorous data cleansing, validation, and enrichment processes are essential. Maintaining high data quality allows AI algorithms to draw meaningful insights and improves the overall effectiveness of intelligent data pipelines.

Real-time data processing is another crucial best practice. In today's fast-paced business environment, the ability to analyze and respond to data in real time is a competitive advantage. Integrating AI into data pipelines should involve mechanisms for processing streaming data, enabling organizations to make timely decisions based on the most up-to-date information.
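A typical mechanism for streaming data is a time-based sliding window, where each new event updates an aggregate over only the most recent interval. The sketch below is a minimal single-process version; the class name, the 10-second window, and the sample events are assumptions for illustration.

```python
from collections import deque


class SlidingWindowAverage:
    """Maintains the average of values seen in the last `window_seconds`."""

    def __init__(self, window_seconds):
        self.window = window_seconds
        self.events = deque()  # (timestamp, value) pairs, oldest first
        self.total = 0.0

    def add(self, timestamp, value):
        """Record an event and return the average over the current window."""
        self.events.append((timestamp, value))
        self.total += value
        # Evict events that have fallen out of the window.
        while self.events and self.events[0][0] <= timestamp - self.window:
            _, old_value = self.events.popleft()
            self.total -= old_value
        return self.total / len(self.events)


window = SlidingWindowAverage(window_seconds=10)
events = [(0, 4.0), (3, 6.0), (9, 8.0), (12, 2.0), (25, 10.0)]
averages = [window.add(ts, value) for ts, value in events]
```

Each event is added once and evicted once, so the cost per event stays constant on average regardless of how long the stream runs. The sketch assumes events arrive in timestamp order.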

Automation and orchestration play a pivotal role in optimizing the integration of AI and data engineering. Automated workflows streamline the movement of data through various stages of the pipeline, reducing manual intervention and minimizing the risk of errors. Orchestrating tasks across the entire data ecosystem ensures a cohesive and well-coordinated operation, promoting efficiency and reliability.
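At its simplest, orchestration means declaring which tasks depend on which and letting a scheduler derive the execution order. The sketch below uses `graphlib.TopologicalSorter` from the Python standard library (3.9+). The four task functions and the shared `context` dictionary are hypothetical, and dedicated orchestrators add retries, scheduling, and monitoring on top of this idea.

```python
from graphlib import TopologicalSorter


# Hypothetical tasks; each reads from and writes to a shared context dict.
def extract(ctx):
    ctx["raw"] = [" 3", "1 ", "2"]


def transform(ctx):
    ctx["clean"] = sorted(int(x) for x in ctx["raw"])


def validate(ctx):
    ctx["valid"] = all(x > 0 for x in ctx["clean"])


def load(ctx):
    ctx["loaded"] = ctx["clean"] if ctx["valid"] else []


tasks = {"extract": extract, "transform": transform, "validate": validate, "load": load}

# Each task maps to the set of tasks that must finish before it starts.
dependencies = {
    "transform": {"extract"},
    "validate": {"transform"},
    "load": {"transform", "validate"},
}


def run(tasks, dependencies):
    """Execute tasks in dependency order and return (context, execution order)."""
    context, order = {}, []
    for name in TopologicalSorter(dependencies).static_order():
        tasks[name](context)
        order.append(name)
    return context, order


context, order = run(tasks, dependencies)
```

Declaring dependencies instead of hard-coding a call sequence means a new task can be added by editing the graph, and a cycle is reported as an error rather than causing a silent misordering.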

Future Trends in AI and Data Engineering

The landscape of artificial intelligence (AI) and data engineering is ever-evolving, driven by technological advancements, emerging challenges, and the growing demand for more sophisticated data solutions. Several key trends are shaping the future of this intersection, ushering in a new era of innovation and efficiency.

One significant trend on the horizon is the integration of edge computing and the Internet of Things (IoT) into AI and data engineering workflows. As more devices become interconnected and generate vast amounts of data at the edge of networks, there is a pressing need for intelligent data processing closer to the source. This shift not only reduces latency but also enables real-time decision-making, making it a crucial aspect of future data pipelines.

Explainable AI (XAI) is gaining prominence as organizations seek to demystify the black-box nature of some machine learning models. In the context of data engineering, understanding how AI models arrive at specific conclusions is crucial for building trust and ensuring compliance. The ability to provide transparent and interpretable results is becoming a cornerstone of future data systems.
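For intuition about what an interpretable result looks like, consider the simplest case: a linear model, where each feature's contribution to a prediction can be read off exactly. The weights, baseline, and feature names below are invented for illustration; non-linear models need dedicated attribution techniques, which this sketch does not cover.

```python
# Hypothetical linear scoring model.
WEIGHTS = {"income": 0.5, "debt": -0.8, "tenure": 0.2}
BIAS = 0.1

# Hypothetical reference input that explanations are measured against.
BASELINE = {"income": 1.0, "debt": 1.0, "tenure": 1.0}


def predict(features):
    """Linear score: bias plus the weighted sum of the features."""
    return BIAS + sum(WEIGHTS[name] * features[name] for name in WEIGHTS)


def explain(features):
    """Per-feature contribution to the score, relative to the baseline input."""
    return {
        name: WEIGHTS[name] * (features[name] - BASELINE[name])
        for name in WEIGHTS
    }


applicant = {"income": 3.0, "debt": 2.0, "tenure": 4.0}
contributions = explain(applicant)

# For a linear model the contributions account for the full score difference.
score_difference = predict(applicant) - predict(BASELINE)
top_feature = max(contributions, key=lambda name: abs(contributions[name]))
```

The contributions sum to the difference between the applicant's score and the baseline score, so the explanation accounts for the whole prediction. That additivity is what makes the result easy to audit.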

The intersection of AI and Data Engineering, leading to the development of Intelligent Data Pipelines, represents a powerful synergy in the data-driven era. By harnessing the capabilities of artificial intelligence and the principles of effective data engineering, organizations can unlock new levels of data-driven insights, agility, and efficiency. As this field continues to evolve, staying at the forefront of this intersection will be crucial for businesses seeking to gain a competitive edge and make informed decisions based on high-quality, real-time data. Embracing this synergy is not merely a choice; it is a necessity for the future of data-driven success.